3.4 GPT hallucinations

🤪 trying to shortcut your research with AI? lol you can't. use legit shortcuts instead. like books by humans, IOW.

I’ve been digging deep into medieval Japan lately. I’m writing a novel set in that time and place, and of course the more you read the more you find. It sprawls, because culture sprawls. I started with a person, which morphed into a family, which morphed into a movement, which morphed into a location, which morphed into a religion, which morphed into a mentality, which morphed into an attitude that you then have to evaluate in terms of social class and economic reality.

But the morphing isn’t always linear, of course. There are tons of side branches; if I were to go down all those roads, this book would never get written. I simply can’t afford to take as long as I would like to do a really thorough job. At that point, this wouldn’t be a novel, it would be a dissertation. And I left that behind many years ago.

So I have to use some shortcuts. And in the past, I felt that ChatGPT was pretty efficient as a Google substitute, especially its “deep research” function. Of course, I ask for links and citations on everything. Once I check out the links, I usually find more related material on my own.

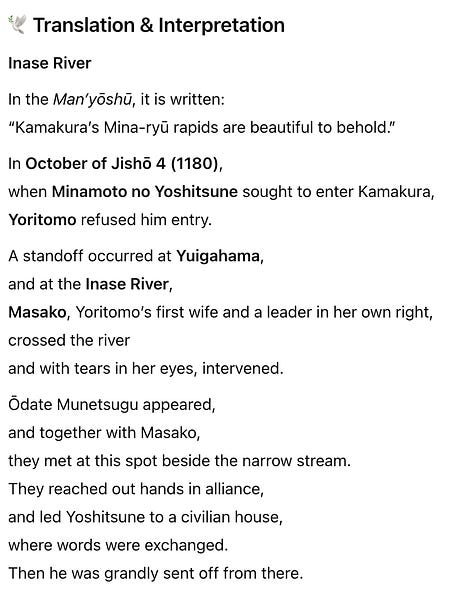

The other day, I was working on reading a memorial stone that was erected in the 1930s, and it occurred to me to upload a photograph and ask for a translation, first into modern Japanese and then into English. I thought it might be faster to start with an AI translation that I could then check over for accuracy.

But I could see right away that something was wrong. It’s a good thing I have the ability to scan text in Japanese, because the translation was naming people who weren’t in the text and excluding the people who were. In addition, I already knew what the memorial stone was commemorating, so it was weird that the translation made no mention of it.

So I asked—repeatedly—for clarification. And each time, the bot gave me a wild piece of fiction, with incidents that never happened.

Usually, if I ask for clarification, the bot corrects itself. In the past I’ve caught it giving me citations for sources that don’t exist. When I point this out, the friendly AI chatbot will jump in with “you’re absolutely right” and correct itself. But not this time. Its translation got weirder and weirder.

Basically, I’ll have to translate the stone myself. And this experience scared me away from using AI to translate anything that I cannot translate on my own. I can’t even use what it gave me to speed up my own translation, because it’s wildly inaccurate, just completely made-up gibberish. When I think about relying on it to translate Chinese or Korean, which I don’t read, that’s a hard no.

I’m already taking shortcuts by using secondary sources. By doing so, I rely on the professors and independent researchers out there who have done the hard work so I don’t have to. They write in academic journals. They publish books. Sometimes they even publish here on Substack. They’re reading the sources I can’t read and connecting dots among sources I don’t have time to read or maybe that I don’t know exist. They always cite their sources, and I can look at those if I want more detail, or if I’m not sure I follow their arguments or agree with them.

But AI has turned out not to be a secondary source, because it lies when it doesn’t know the answer, and it isn’t vetted by anyone but me. So in the end, I’m doing the work anyway. I might as well just do the work from the beginning and spare myself the aggravation.